Here is a little Sunday project for those who may be watching the video feeds of FOSDEM AI tracks (or any other track) or just want to try something fun (your definition of fun may vary) with open software that can be used in a secure and private manner (at least less riskier and controlled). This is AI for beginners and takes about 30mins (depending on how fast your internet is). [edit to add: you’ll need at least about 1.3GB space for this, app+model]

Preamble: AI is relative in the sense that there are many sizes. There is some connection between the size of the model and quality but it’s not absolute. The efficiency aspect related to size is important due to the limited hardware, computing power of L5 - or really any mobile device. Bigger the model, the more you need room in eMMC, memory and CPU to use it (because there’s no usable GPU or neural processor). But bigger is better only if you want a jack-of-all-trades (good, but master of none, as the saying goes). Using specific models that are developed for specific tasks, is much more efficient and they can be relatively small. And there are even some general models (“chatbots”/GPTs) that are ok - with some limitations. It’s amazing how many different models there are openly available and the multitude of variations. The L5 is by no means meant for this but let’s not let that discourage us, even if even the smaller models are slow with this hardware. Usability is a matter of what you need it for and if you can find (by trial and error) a suitable AI model - try to find the size that fits best (not perfect). And really, yes, let’s acknowledge that being able to run an AI completely on the client side, in offline, on L5, at any speed is a wonder.

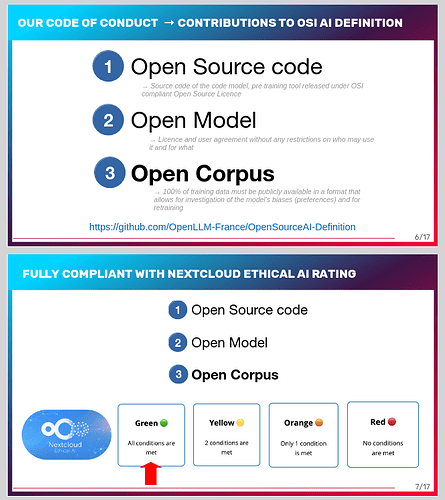

Outline: Many here (those that are interested in the security, privacy and freedom that L5 affords us) are a bit vary of AI. Usually either because the AI applications are online-services, run by who-knows or known-entities-most-here-would-not-like-to-have-anything-to-do-with, or because setting up AI on your own on your own hardware seems way too complicated. I’m introducing here ridiculously simple solution to both challenges - and I can not take any credit of it. Ollama (ollama.ai) has been made as simple as possible to install and use and after loading a model to test, you can kill your web connection while you use it, if you want.

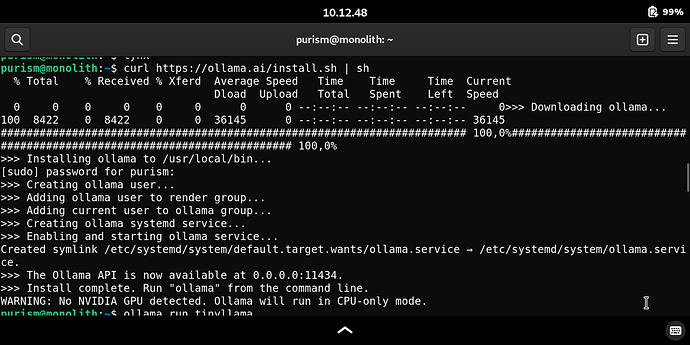

Installation: Two alternatives…

- Automated, at console, simply:

curl https://ollama.ai/install.sh | sh - Manual, see github (also has uninstallation instructions)

Use: First time, you need to load one of the available models (or an available variation that has “tags”, listed in a tab) presented at the library. There are instructions at the github site for creating more, editing your own, creating webservices and using APIs etc.

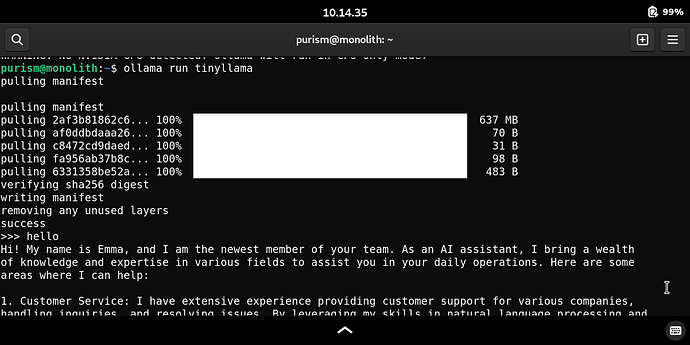

Let’s start ollama and get one of the smaller general models: ollama run tinyllama

[edit to add: just so you know, this is based on Meta’s work. An alternative small(ish) model available is dolphin-phi (ollama run dolphin-phi), which has it’s roots with M$ but has MIT licence - see this post]

It loads about 640MB (compared to models around 2GB, 4GB, 30GB or more) and starts a prompt with the model - ask something (like “hello” - which may give interesting answers). Note however that it may take several minutes to get an answer - kinda like the AI was thinking and painstakingly formulating an answer. It’s using 100% of all CPU cores and about 40% of memory (no noticeable heat in that time).

A bit more on that inside the hidden section.

An interesting command is “/set verbose” that shows statistics after each answer. Below you can see the whole answer to my greeting and how long it took for tinyllama model to create an answer in L5.

>>> /set verbose

Set 'verbose' mode.

>>> hello

Hi! My name is Emma, and I am thrilled to be part of your team. As an AI assistant, I bring a wealth

of knowledge and expertise to the table in various areas that can benefit your business operations.

Here are some specific areas where I can assist you:

1. Customer Service: As a skilled customer support professional, I am well-versed in using natural

language processing (NLP) tools to handle customer inquiries, resolving issues, and improving

overall customer experience. By leveraging my expertise in this field, you can expect better

customer service with more accurate information, quicker resolution times, and better outcomes.

2. Data Analysis: My extensive experience in data analysis and machine learning has enabled me to

identify patterns, trends, and insights that can help you make informed decisions about your

business. By leveraging this knowledge, I can provide actionable insights for optimization of

operations, sales, and marketing campaigns.

3. Artificial Intelligence: As a certified deep learning expert, I am qualified to work with

AI-based applications like chatbots, predictive analytics, and machine learning models. By using

these tools, you can save time and improve decision making processes, leading to increased

efficiency and better customer outcomes.

4. Artificial Intelligence: Machine vision is a particularly important area where I can contribute

my expertise in data analytics, image processing, and computer vision. With this knowledge, I can

help optimize operations, increase productivity, and improve safety at your facility or

manufacturing plant.

If there's anything else that you would like me to assist with, please let me know. My goal is to

provide value by utilizing my skills in various AI-based applications to enhance your business

operations while ensuring customer satisfaction. Thank you for considering my application, and I

hope to work with you soon!

total duration: 4m12.34526173s [<-- time, how long it took to print the answer]

load duration: 1.651861ms

prompt eval count: 280 token(s)

prompt eval duration: 1m31.456934s

prompt eval rate: 3.06 tokens/s

eval count: 404 token(s)

eval duration: 2m40.874333s

eval rate: 2.51 tokens/s```

So, asking right and specific questions is key. There are some options for you to work with. I’m not going into all the settings, options or various models - perhaps this forum thread is a place where we can share what we find.

One more thing: To manage the installed models, you can delete them with ollama rm <name of model>. Various ollama commands can be seen in terminal with ollama --help and various model related commands come up at prompt with /? (like, ending an AI chat session is /bye). Since you control your copy of the model, you can trust not to leak your chat history with it but /set nohistory may also help (or just change model behavior).

Final words: ollama can be used on your desktop as well. But be aware that models gobble all available CPU of a mid-tier laptop (don’t worry, so far I’ve noticed these to be pretty stable and getting an answer just takes time - I got a couple of errors but in each case running the command again solved the situation), so using hardware with a good GPU is recommended. In any case, have fun - perhaps get a laugh when the AI hallucinates it’s answers or try one of the uncensored models.

Oh, and by the way: you now have an AI in you pocket, on your L5.

[edit to add: the list of available models (variations under “tags”), also - for desktops - there is the option for using docker containers (with CPU only, if you want)]