I got my Librem about 3 weeks ago and thought I would summarize my experience for the Qubes users here, now that the latest release has been marked stable. Despite the price, I’m pleased overall with the hardware and my setup, and will give a summary here covering the hardware/firmware and how it interacts with the OS.

Hardware: Librem 15v3, TPM version + HyperX Kingston DDR4 16GB RAM + a SATA Samsung SSD

BIOS/ME firmware: latest coreboot 4.7-Purism-4, flashed per kakaroto’s script (initially with ver. 4.7-Purism-3)

Xen: 4.8.3-series current-testing latest

Linux Kernel (dom0): 4.9.56-21

Initial testing was done on an old 3.2 installation, after which a fresh 4.0-stable install was made

The Good:

- the laptop comes with some nice packaging and mini instructions for things like battery care

- the laptop disassembly is easy, provided one is careful with the screwdriver

- battery time seems decent to me on all systems I tested so far (Q3.2, Q4.0, Linux Mint, PureOS), including suspend. It seems that some people have issues though, so maybe they hit a bad series of battery parts

- the latest firmware fixes the loud fan (from ver. Purism-3), now it only revs up when actually under load

- cooling in general seems correct now, the system does not have spikes or unexpected shutdowns

- no general power issues, suspend was always behaving right

- VT-d/SLAT and TPM function as expected

- USB, including passthrough and sys-usb, functions as expected, but no-strict-reset has to be set on the controller (easy to do from the settings menu). There is also a regression with 4.14+ kernels for some USB devices (usbip issues, not for mass storage); loading a 4.9 VM kernel on sys-usb resolves the issue (https://github.com/QubesOS/qubes-issues/issues/3628)

- HDMI works correctly, although kernel 4.4 is the last one to pick up modelines correctly, and 4.9+ kernels will need manual settings for hi-res monitors under Xorg

- Dual-band WiFi card performs well, even with some distance to the router and several walls in between

- Touchpad (incl. gestures), Camera, Mic, Bluetooth (over USB, proprietary firmware), Headphone Jack, USB-C, SD/MMC, Killswitches, FN keys all work without a hitch

- HVMs and PVH modes work without any issues, Qubes in general is responsive under load (video, multiple VMs, disk backups etc.)
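
For anyone who prefers the command line to the settings menu, the two sys-usb workarounds from the USB point above can be sketched roughly like this. It only prints the dom0 commands rather than running them. The controller address `dom0:00_14.0` and the VM kernel name `4.9.56-21` are placeholders I assumed for illustration: check `qvm-pci` for your actual controller and use whichever 4.9 VM kernel you have installed. If the controller is already attached to sys-usb, it would presumably need detaching first.

```python
import shlex


def sys_usb_workaround_commands(vm="sys-usb",
                                controller="dom0:00_14.0",  # placeholder BDF, assumption
                                kernel="4.9.56-21"):        # placeholder VM kernel name
    """Build (but do not run) the dom0 commands for the two workarounds."""
    return [
        # Attach the USB controller persistently with no-strict-reset set.
        ["qvm-pci", "attach", "--persistent",
         "--option", "no-strict-reset=True", vm, controller],
        # Pin the qube to a 4.9 VM kernel to avoid the 4.14+ usbip regression.
        ["qvm-prefs", vm, "kernel", kernel],
    ]


if __name__ == "__main__":
    for argv in sys_usb_workaround_commands():
        print(shlex.join(argv))
```

Review the printed lines before pasting them into a dom0 terminal; sys-usb needs a restart for the kernel change to apply.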

The Bad:

- the PureOS installer of the system which came on the Biwin SSD drive was broken - computers are my job, but normal people would be stuck here which is a bad customer experience

- the hwclock (qvm-sync-clock uses it for VM clock setting) system sync is broken for linux 4.14/4.15

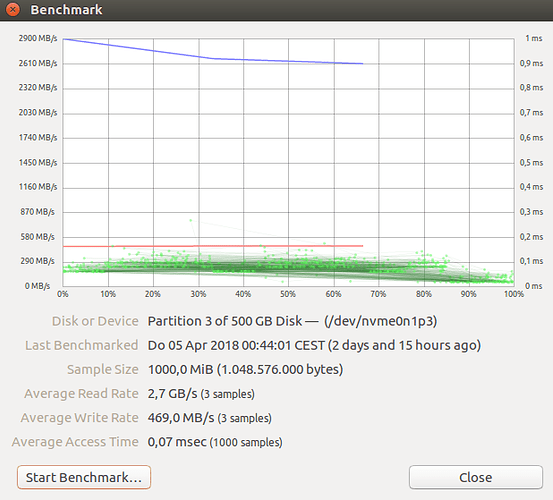

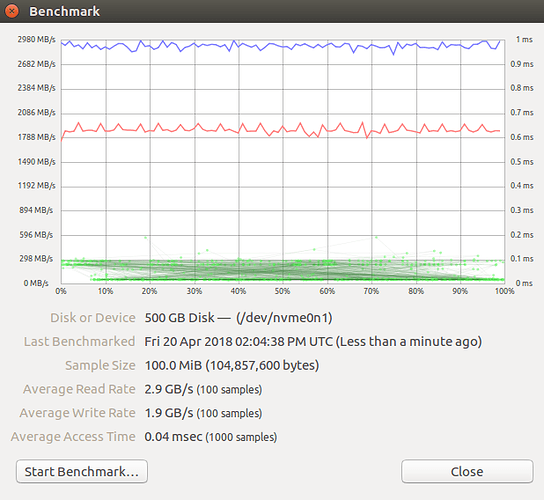

4.9 and 4.4 behave as expected, with 4.9 being much better due to Intel graphics improvements - SATA is limited to 3Gbps instead of 6Gbps because of firmware issues (see kakaroto’s posts) which is a bit on the slow side for larger backups even with TRIM, NVMe is an option though it that limits you

- Ethernet device (only shows up once it is connected) does not work correctly, as tested with a USB-C connector exposing an RJ45 port on 4.9 and 4.14 kernels. The errors are likely caused by some bad USB high-speed driver interactions, which will probably get fixed in the future; still, if you need this now, expect trouble (https://github.com/QubesOS/qubes-issues/issues/2594)

- EDIT: I’m also sometimes seeing the CPU fan stuck at high speed. I did not catch this originally, as the issue is only occasionally reproducible after suspend/resume. Another suspend/resume cycle fixes the annoyance, but it’s clearly firmware-related
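
To put the SATA limit into numbers, here is a rough back-of-the-envelope sketch. SATA uses 8b/10b encoding, so every payload byte costs 10 bits on the wire, which gives about 300 MB/s at 3Gbps and 600 MB/s at 6Gbps as theoretical ceilings. The 100 GB backup size is just an example figure I picked, and real backups will be slower because of encryption, compression and protocol overhead.

```python
def sata_payload_mb_per_s(line_rate_gbps):
    """Theoretical payload rate in MB/s for a SATA link.

    8b/10b encoding means 10 line bits carry one payload byte.
    """
    return line_rate_gbps * 1000 / 10


def transfer_minutes(size_gb, line_rate_gbps):
    """Best-case minutes to move size_gb (decimal GB) over the link."""
    return size_gb * 1000 / sata_payload_mb_per_s(line_rate_gbps) / 60


if __name__ == "__main__":
    for rate in (3, 6):
        print(f"{rate}Gbps: {sata_payload_mb_per_s(rate):.0f} MB/s max, "
              f"100 GB in at least {transfer_minutes(100, rate):.1f} min")
```

So the firmware limit at worst doubles the best-case time of a large sequential transfer, which only matters if the SSD can actually go faster than roughly 300 MB/s.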

The Ugly (not a must, but could be improved):

- disassembly instructions are quite bare-bones, if you haven’t done this much - watch some short guides to not damage some of the connectors (e.g. for the battery)

- no LED indicator on the laptop means that to make sure it suspends, you’ll want to trigger suspend manually and then close the lid, lest it burns up from bad ventilation in your bag

- Qubes 3.2 and 4.0 in general have a slow boot-up time (proportional to the number of boot-time VMs as well) and an even slower shutdown due to some LUKS/LVM sync issues. I saw this on both SSDs tested over 2 laptops, so it’s likely an OS bug I’m hitting

- PureOS ISOs really should get GPG-signed hashsums, with the fingerprint/key available outside puri.sm servers - or at least be available from more than 1 location, which would be a good fail-over in case another server outage happens
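
To show what the hashsum check on the user side could look like, here is a minimal sketch, assuming a SHA-256 sum is published next to the ISO. The file name and hash value in the usage comment are made up. The script only confirms the download matches the published hash; whether the hash itself can be trusted still depends on verifying its GPG signature (e.g. with `gpg --verify`) against a key obtained from an independent source, which is exactly the part I’m asking for.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large ISOs do not need to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, published_hex):
    """Compare the file's SHA-256 with the published hex string."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(sha256_of_file(path), expected)


# Hypothetical usage (file name and hash are placeholders):
# ok = matches_published_hash("pureos.iso", "<hex digest from the signed hashsum file>")
```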

Not tested:

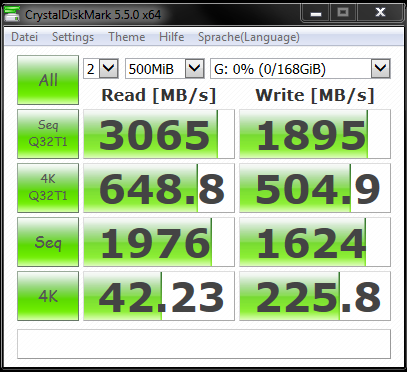

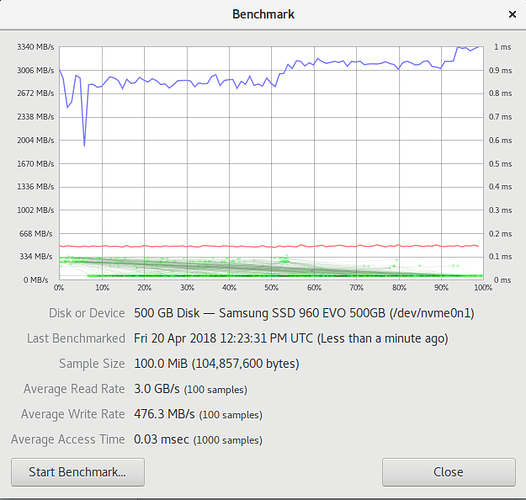

- NVMe M.2 device

I also commend Purism for building this nice forum which welcomes interactions with frequent responses from staff, good job!

Eagerly awaiting Heads support,

Happy customer