@reC, this is a really complex topic, which is why I consider it premature to think about optimizing it without any indication that there’s actually a problem.

In a simplified (!) overview (in general valid for all modern architectures / operating systems), the delay consists of three parts. Let’s call them System, Application, and Graphics.

System is the delay between the physical event (touch, click, keypress) and its delivery to the windowing system (X11, Wayland), after the kernel has processed it.

In general, this delay should be comparable across input devices (touch, mouse, keyboard), at least if they are connected the same way (USB, not Bluetooth). This layer probably accounts for most of the delay, but a lot of it comes from the hardware itself (including connectivity, e.g. USB).

Application: the windowing system delivers the input to the application, which then updates its state and redraws the window content. This should be very quick, <1ms.

(Except we’re talking about a javascripty, bloaty web page…)

Graphics: finally the delay until the changed content is visible on the screen. In general, this should also be very fast (<1ms), but it depends a lot on the actual implementation (DRI, DMA, compositing…).

Intrinsic delays

I briefly mentioned inherent delays that happen in the hardware (controller chip of the input device, plus USB bus etc.).

But all of the above layers have intrinsic delays that I did not mention, and that cannot easily be avoided. On a multi-core system with a reasonable CPU load, every context switch takes (simplified) 0…10ms. The chain is: kernel(input) -> windowing system -> application -> compositing manager -> kernel(video), i.e. four hand-offs. So, worst case this could add up to 40ms, but it should usually be significantly less. This can be tweaked in the kernel, but if smaller time slices had only benefits, they would obviously be the default.
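To make the arithmetic explicit, here is a tiny sketch of that chain. It only counts the hand-offs and multiplies them by the simplified 0…10ms range from this post; the names `STAGES`, `PER_HANDOFF_MS` and `handoff_budget` are made up for illustration, and none of this is a measurement.

```python
# Rough model of the scheduling hand-offs in the input chain.
# The 0..10 ms range per hand-off is the simplified figure from the post,
# not a measured value.
STAGES = [
    "kernel(input)",
    "windowing system",
    "application",
    "compositing manager",
    "kernel(video)",
]
PER_HANDOFF_MS = (0.0, 10.0)  # (best case, worst case) per hand-off


def handoff_budget(stages, per_handoff_ms):
    """Return (number of hand-offs, best-case ms, worst-case ms)."""
    handoffs = len(stages) - 1  # one hand-off between each pair of neighbours
    best, worst = per_handoff_ms
    return handoffs, handoffs * best, handoffs * worst


handoffs, best, worst = handoff_budget(STAGES, PER_HANDOFF_MS)
print(f"{handoffs} hand-offs: best {best:.0f} ms, worst {worst:.0f} ms")
```

Dropping one stage from the list (say, running without a compositing manager) removes one hand-off and, in this simplified model, up to 10ms from the worst case.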

Additionally, there is the screen refresh rate. At 60Hz, this adds a delay of 0…16ms.

So, 8ms on average, right?

Yes, but easily >30ms if multi-buffered.

That means that going from buffered to unbuffered can save you 30ms of input lag. But possibly somebody had a reason to enable it in the first place.
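The refresh numbers can be checked with a few lines. This is a sketch under one loud assumption: every extra frame queued ahead of the display (double or triple buffering) costs one full refresh period. Real compositors and drivers behave in more complicated ways, and `refresh_delay_ms` and `queued_frames` are names invented for this example.

```python
def refresh_delay_ms(refresh_hz, queued_frames=0):
    """Return (best, average, worst) delay in ms until a change is scanned out.

    Assumption (not from any driver documentation): each queued frame
    adds exactly one refresh period on top of the wait for the next refresh.
    """
    period = 1000.0 / refresh_hz       # ~16.7 ms at 60 Hz
    extra = queued_frames * period     # time spent waiting in the buffer queue
    return extra, extra + period / 2, extra + period


for queued in (0, 1, 2):
    best, avg, worst = refresh_delay_ms(60, queued)
    print(f"queued={queued}: best {best:.1f} ms, avg {avg:.1f} ms, worst {worst:.1f} ms")
```

With no queued frames the average comes out at roughly 8ms, and with two queued frames the difference to the unbuffered case is about 33ms, which is in line with the 30ms figure above.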

Here’s Bryan Lunduke - Keyboard lag sucks, talking about how and why systems built more than thirty years ago have less (!!!) input lag, despite CPU clock speeds a thousand times slower than what we have nowadays.

Another interesting read: Why Modern Computers Struggle to Match the Input Latency of an Apple IIe.

It contains a link to the MS video I posted above, but more interestingly a link to this comparison table of different devices throughout the decades.

(It also adds a lot more detail; for example, it explains that the display itself can take around 10ms to turn a white pixel black.)

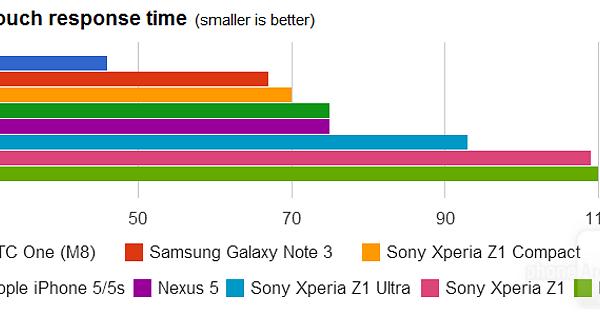

It seems to indicate that well-designed Linux systems should have an input delay of 50…100ms.

That’s why I’m rather optimistic that the Librem 5 will not be worse than average Android devices.

My favourite developer quote is “All problems in computer science can be solved by another level of indirection”.

Input lag must be the exception to the rule. That’s why some people like to add:

“…except for the problem of having too many levels of indirection”.