Is this what you are looking for?

Looks cool. I have to say I have no idea whether this is what makes FDE (full-disk encryption) fast, as normally the CPU lists “AES”. *shrug* At least it sounds good.

I thought the Fir batch was considered to be L5v1 souped up, while the batches before Evergreen are considered to be L5v1 souped down.

Why anybody absolutely needs 8 GB of RAM on a smartphone eludes me. I am on my Tuxedo laptop now, running MX-18, and have 16 GB of RAM, some 14 GB of which are sitting unused while I have three applications running besides this browser.

Linux is rather frugal in its RAM use, and my laptop using 4 GB of RAM for multiple processes is unlikely to be matched by my smartphone use. Even if I were gaming (I don't), unless I had quite a bit more going on, I doubt I could use the 3 GB of RAM included here.
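To compare figures like these on a laptop or a phone, one rough way to see how much RAM is actually in use on Linux is to read `/proc/meminfo`. This is a minimal sketch: `ram_usage` is a hypothetical helper name, and it treats "used" as MemTotal minus MemAvailable, which is one common estimate rather than the only possible definition. MemAvailable requires Linux 3.14 or later.

```shell
#!/bin/sh
# Rough RAM usage estimate from the kernel's own counters (values are in kB).
# Assumption: "used" = MemTotal - MemAvailable; other tools may define it differently.
ram_usage() {
    meminfo="${1:-/proc/meminfo}"   # optional path, defaults to the live system
    awk '
        /^MemTotal:/     { total = $2 }
        /^MemAvailable:/ { avail = $2 }
        END {
            used = total - avail
            printf "total %d MiB, used %d MiB, available %d MiB\n",
                   total / 1024, used / 1024, avail / 1024
        }' "$meminfo"
}

ram_usage "$@"
```

Run it with no arguments to inspect the current machine, or pass a saved copy of another device's `/proc/meminfo` to compare.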

I don't know how much RAM Linux programs will take, but right now I have a very old Android smartphone with 2 GB of RAM, and I never use more than 1.2 GB with a torrent client, browser, file manager and mail running at the same time. Maybe a heavy game needs 2 GB or more, but I stopped playing 3D and big games.

Oh yes, I mean efficiency at maximum performance.

For me, it’s always my browser that, when I leave it on, uses ludicrous amounts of RAM. 3D rendering can also use absurd amounts of RAM with a large enough scene.

That’s why I said “should” not “does”.

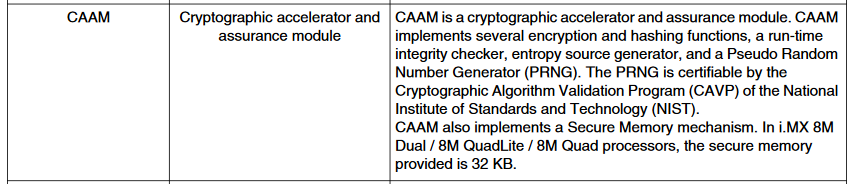

From the Wikipedia page on i.MX:

Standard Key Features: Advanced Security

But what does that mean? The Advanced Encryption Standard (plus the instructions for SHA and GCM)?

It is difficult to know, because AES is an optional capability across the ARM “A” series: its behaviour is defined, but any given manufacturer may or may not implement it.

When someone actually gets a phone, they can check with:

```shell
grep -E 'aes|sha|pmull|crc' /proc/cpuinfo
```
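A slightly more explicit variant reports each flag separately. This is a sketch, assuming an arm64 (or 32-bit ARM) kernel, where these flags appear on the `Features` line of `/proc/cpuinfo`; `check_crypto_flags` is a hypothetical helper name, and on other architectures it will simply report every flag as absent.

```shell
#!/bin/sh
# Report which ARMv8 crypto-related feature flags the kernel lists.
check_crypto_flags() {
    cpuinfo="${1:-/proc/cpuinfo}"   # optional path, defaults to the live system
    # First "Features" line only; cores normally report the same set.
    features=$(grep '^Features' "$cpuinfo" | head -n 1 | cut -d: -f2)
    for flag in aes pmull sha1 sha2 crc32; do
        # Pad with spaces so "sha1" cannot match inside a longer word.
        case " $features " in
            *" $flag "*) echo "$flag: yes" ;;
            *)           echo "$flag: no" ;;
        esac
    done
}

check_crypto_flags "$@"
```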

Perhaps that is a risk of speculatively designing v2 when we don’t even have v1 yet.

I am keeping an open mind on how much RAM my typical use will need.

Convergence is not a big thing for me, but once you get into that, RAM use is going to go beyond phone calls and random surfing.

I think dual SIM is unlikely as well. As far as I understand the M.2 B-key interface, it only supports one SIM (one USIM interface), so to have dual SIM you would need a modem with an onboard SIM plus a second USIM interface routed to the M.2 connector, or a modem with an additional embedded SIM. I don’t see either coming from Purism in the next year. It already seems to be enough of a struggle to get modems that cover the needed bands and satisfy the no-runtime-firmware constraint. Though they might have (had) more room to figure this out than others, as I think they have a custom M.2 module made for them.

But I have some hope for an improved version of this. I believe they have to keep costs down to recoup what they have invested so far, and for that I would estimate 3 to 5 years after general availability. Keeping working parts the same is probably much cheaper than ordering and developing new boards or WWAN cards, so a change has to be worth it, and I don’t see dual SIM making them that much money.

But I might be totally wrong. I have personally never met someone with a dual SIM or the need for one. But I have heard it’s much more common in Asia, and that is a potentially huge market. Though Purism seems to have more customers in the US and EU, from what I have seen here in the forum.

So I am also sticking with no dual SIM, unless someone finds a suitable M.2 modem.

Or a 5G modem that is free down to the firmware magically gets released somewhere.

Unfortunately, as long as the operators each build their own antenna network, there will be problems getting any signal at all. I need two SIMs to have coverage in the forest where I work all winter. Stupid system.

I really hope the Librem 5 has a good antenna, or else its use will be very restricted: near the Wi-Fi of our fiber network, or when I go to town (which happens seldom). There is no band available for your own base station either (although I know that Nokia makes small “personal” base stations).

I wonder if an improved external (possibly active) antenna could be a practical hack?

ARM claims that the Cortex-A35 is the most energy-efficient processor ever, with a target power consumption of no more than 125 milliwatts (mW), and it has already achieved 90 mW on a 28 nm process at 1 GHz. So on a 14 nm process, at constant or even lower power consumption, it is easy to exceed 2 GHz. This leads me to the already available (R&D) NXP i.MX8QuadXPlus processor, currently on 28 nm FD-SOI and therefore too big for the Librem 5 v2; but if i.MX8QuadXPlus production moves to a 14 nm process, that may shrink/change everything. This is of course just another spec-ulation that may work, yet not tomorrow. Expert insight is welcome, please.

Pure speculation on my part, but I would guess 220 grams, due to the larger circuit board and the two M.2 cards.

Hmm. If you double the clock frequency, you double (edit: not quadruple) the power drawn. So 90 mW @ 1 GHz means 180 mW @ 2 GHz.

Power is also directly proportional to the square of the driving voltage (edit). I’m not sure how the voltage changes with feature width, but suppose you could halve it when going from 28 nm to 14 nm. If so, 180 mW × (1/2)² = 45 mW @ 2 GHz and half the voltage.

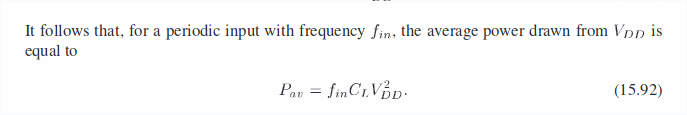

Edit: This is entirely theoretical, and based on

- P = U∙I (power equals voltage times current) and

- I = f∙(constant∙U) (frequency times charge, for a capacitive load)

- so P = U∙U∙f∙constant, or P ~ U²∙f
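Plugging the figures from this post into that proportionality (90 mW at 1 GHz, clock doubled to 2 GHz) together with its assumption that the voltage could be halved going from 28 nm to 14 nm, ignoring leakage:

$$
\frac{P_2}{P_1} = \left(\frac{U_2}{U_1}\right)^2 \frac{f_2}{f_1}
\quad\Rightarrow\quad
P_2 = 90\,\text{mW} \times \left(\tfrac{1}{2}\right)^2 \times \frac{2\,\text{GHz}}{1\,\text{GHz}} = 45\,\text{mW}
$$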

From my experience working with chips (I have only done simulations, never taped out): one generation of fabrication process gives you ~30% power saving for the same design, a minor clock boost, and ~25% better density.
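As a rough illustration only, applying that ~30% rule of thumb to the 90 mW Cortex-A35 figure quoted earlier, and assuming for the sake of the example that the saving compounds from one generation to the next (here n is the number of process generations; how many generations separate 28 nm FD-SOI from 14 nm is left open):

$$
P_n \approx (1 - 0.30)^n \, P_0,
\qquad P_1 \approx 0.7 \times 90\,\text{mW} = 63\,\text{mW},
\qquad P_2 \approx 0.49 \times 90\,\text{mW} \approx 44\,\text{mW}
$$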

AFAIK, the standard A53 core design can never go above 1.8 GHz (on a modern process), due to its relatively few pipeline stages and long critical paths. And the A53 core’s area can grow 30% from a low-power design (~1.2 GHz) to a high-frequency design (~1.5 GHz).

So, assuming NXP didn’t go nuts with their layout, they can either build a smaller and less power-hungry chip with the same design, or build one with a higher frequency but the same power efficiency and area (or they could put more devices on the chip).

Just my experience working in academia. I might be horribly wrong.

Citation please.

This comes from a formula in basic electronics. The (dynamic) power consumption of a CMOS gate is P = a∙f∙C∙Vdd², where f is the frequency, a is the switching activity (so a∙f is the average switching frequency), C is the effective capacitance, and Vdd is the supply voltage.

Edit:

Fundamentals of Microelectronics (2007) by Behzad Razavi, page 802.

This formula describes an ideal case, assuming a perfect CMOS gate with no leakage current, which is never the case in practice.
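The ideal formula can be turned into a quick calculator. This is a minimal sketch, assuming the switching activity a and capacitance C stay fixed, so only the frequency and voltage ratios matter; `scale_power` is a hypothetical helper name, and leakage is ignored exactly as in the ideal formula, so real chips will deviate from its output.

```shell
#!/bin/sh
# Scale a known dynamic-power figure with the ideal CMOS relation
#   P = a * f * C * Vdd^2
# With a and C unchanged this reduces to
#   P_new = P_old * (f_new / f_old) * (V_new / V_old)^2
scale_power() {
    # $1 = old power, $2 = frequency ratio, $3 = voltage ratio
    awk -v p="$1" -v fr="$2" -v vr="$3" \
        'BEGIN { printf "%g\n", p * fr * vr * vr }'
}

scale_power 90 2 1     # clock doubled at the same voltage: linear in f
scale_power 90 0.5 1   # clock halved at the same voltage
```

At a fixed voltage the result scales linearly with the frequency ratio; only a change in voltage brings in the squared term.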

So if you double the clock frequency, you ? the power drawn.

As you quoted from lipu, in an ideal case:

In practice, it is complicated. The maximum frequency is determined not only by power but also by how fast the signal can propagate, etc. Doubling the frequency beyond what the vendor/circuit compiler gives you will take far, far more than 4x the power, if it is possible at all.

Underclocking works the same way: some circuits are not stable when the clock is slow, and you can’t underclock some timing-sensitive devices (real-time cores, DRAM PHYs, PCIe(?)). So halving the CPU’s operating frequency will not give you 0.25x the power draw.

OK, if you double the clock frequency, the device will most likely stop working.

However, going by the formula you presented, power is proportional to frequency, right?